Ai Guardrails How To Build Safe Enterprise Generative Ai Solu

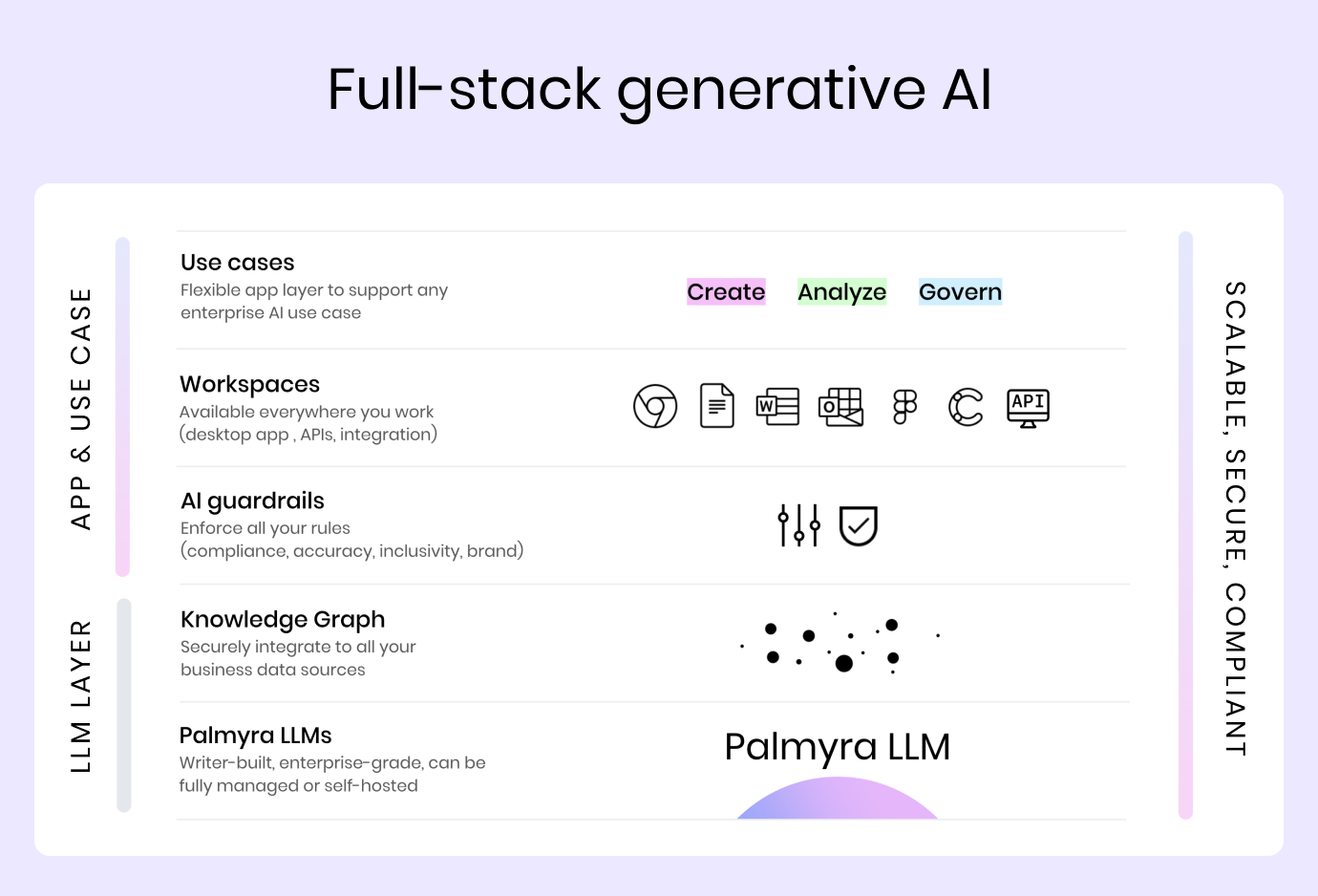

Ai Guardrails How To Build Safe Enterprise Generative Ai S Ai guardrails: how to build safe enterprise generative ai solutions, from day one. kevin wei. in the early 20th century, society was introduced to the automobile. initially a novelty, cars rapidly became essential to everyday life — altering landscapes, economies, and behaviors. however, with this innovation came new risks: accidents. The following figure illustrates the shared responsibility and layered security for llm safety. by working together and fulfilling their respective responsibilities, model producers and consumers can create robust, trustworthy, safe, and secure ai applications. in the next section, we look at external guardrails in more detail.

Enterprise Use Of Generative Ai Needs Guardrails Here S How To Build The company uses the gpt 4 family of gen ai models on the azure openai service, and decided to go with the guardrails available within that platform to build its rebot chatbot. By creating a unified view of data from multiple sources, data integration can serve as a system of checks and balances to reduce the limitations and inaccuracies produced by current generative ai. In that time, we’ve witnessed a cambrian explosion of ai tools, companies, and conversations, with generative ai projected to add $4.4 trillion to the global economy as automation boosts productivity. alongside this feverish activity, there is a deep need for guardrails built on legal, security, technological, and ethical considerations. The engineering team must consider below before opting or building for generative ai solutions. the output matches your enterprise data, pattern, and development practices.llm can’t hallucinate.

Ai Guardrails How To Build Safe Enterprise Generative Ai S In that time, we’ve witnessed a cambrian explosion of ai tools, companies, and conversations, with generative ai projected to add $4.4 trillion to the global economy as automation boosts productivity. alongside this feverish activity, there is a deep need for guardrails built on legal, security, technological, and ethical considerations. The engineering team must consider below before opting or building for generative ai solutions. the output matches your enterprise data, pattern, and development practices.llm can’t hallucinate. In short, guardrails are a way to keep a process in line with expectations. they allow us to build more security in our models and give more reliable results to the end user. today, many. Discover how generative ai is reshaping enterprises and the urgent need for organizations to navigate its risks. learn about the potential inaccuracies, harmful outputs, data leakage, ip infringement, and prompt injection attacks associated with generative ai. introducing genai guardrails, part of the credo ai responsible ai platform, enabling organizations to govern and mitigate risks.

Using Guardrails Ai For Building Generative Ai Apps Youtube In short, guardrails are a way to keep a process in line with expectations. they allow us to build more security in our models and give more reliable results to the end user. today, many. Discover how generative ai is reshaping enterprises and the urgent need for organizations to navigate its risks. learn about the potential inaccuracies, harmful outputs, data leakage, ip infringement, and prompt injection attacks associated with generative ai. introducing genai guardrails, part of the credo ai responsible ai platform, enabling organizations to govern and mitigate risks.

Comments are closed.