Apache Spark RDD 101

Create First RDD (Resilient Distributed Dataset): Apache Spark 101, Java

Spark 3.5.2 works with Python 3.8 and later. It can use the standard CPython interpreter, so C libraries like NumPy can be used. It also works with PyPy 7.3.6 and later. Spark applications in Python can either be run with the bin/spark-submit script, which includes Spark at runtime, or by including PySpark in the dependencies declared in your setup.py.

Quick start. This tutorial provides a quick introduction to using Spark. We will first introduce the API through Spark's interactive shell (in Python or Scala), then show how to write applications in Java, Scala, and Python. To follow along with this guide, first download a packaged release of Spark from the Spark website.

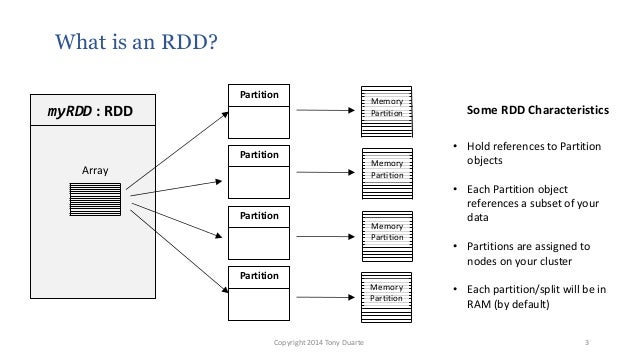

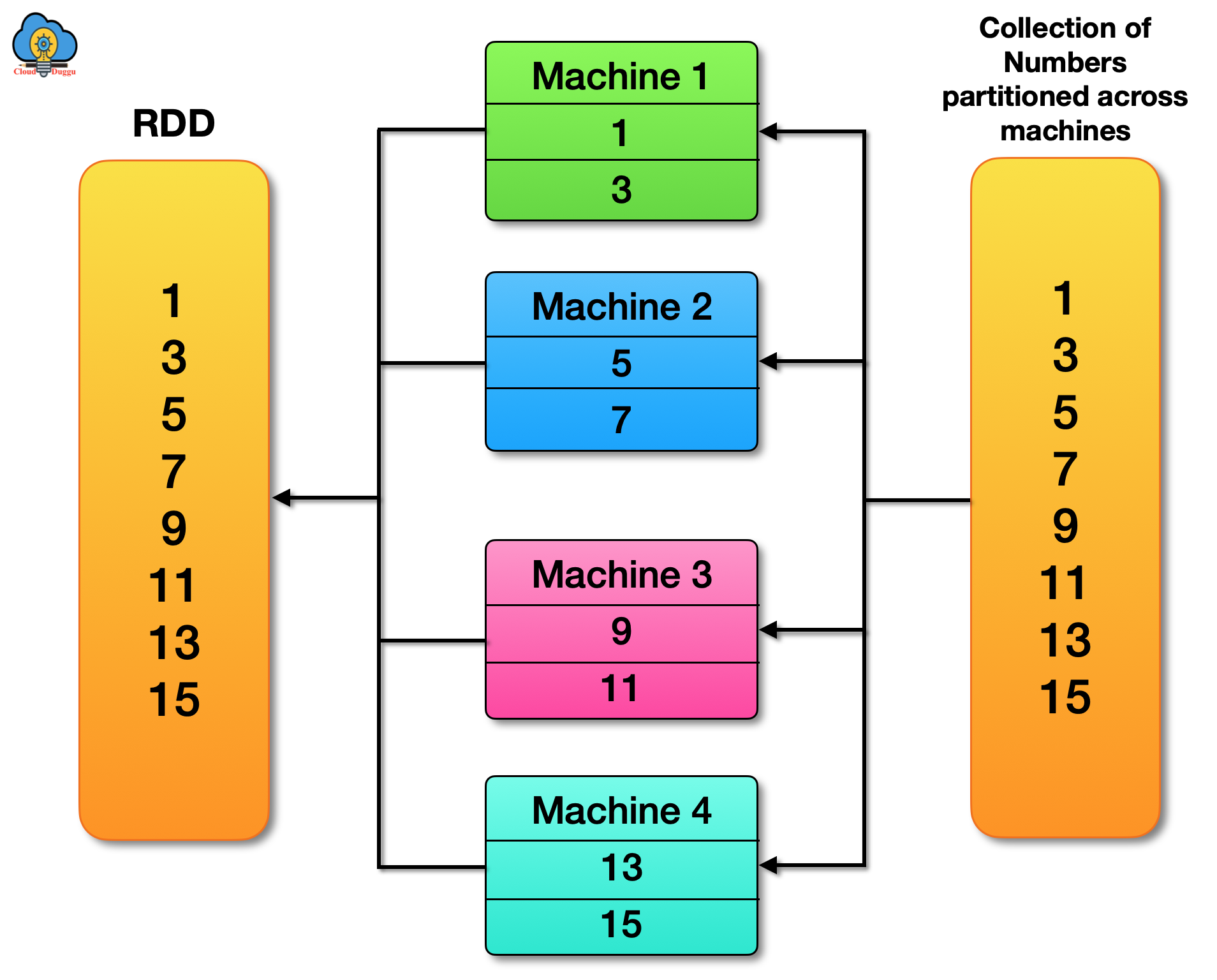

Apache Spark RDD 101

An RDD (resilient distributed dataset) is a core data structure in Apache Spark and has formed its backbone since its inception. It represents an immutable, fault-tolerant collection of elements that can be processed in parallel across a cluster of machines. RDDs serve as the fundamental building blocks in Spark, upon which newer data structures such as DataFrames and Datasets are built.

Apache Spark 3.5 is a framework that supports Scala, Python, R, and Java. It is available through several interfaces: Spark is the default interface for Scala and Java, PySpark is the Python interface for Spark, and sparklyr is an R interface for Spark. Examples explained in this Spark tutorial are in Scala.

Resilient distributed dataset (RDD). The RDD has been the primary user-facing API in Spark since its inception. At its core, an RDD is an immutable distributed collection of elements of your data, partitioned across the nodes in your cluster, that can be operated on in parallel with a low-level API offering transformations and actions.

Apache Spark is an open-source analytics engine used for large-scale data processing. It was developed especially for iterative algorithms and interactive data analysis. Spark can run some programs up to 100x faster than Hadoop MapReduce thanks to its in-memory processing, and it can also run on disk.
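
The split between transformations and actions described above can be illustrated with a toy model. The sketch below is plain Python rather than Scala so that it runs without a Spark installation; `ToyRDD` and its methods are hypothetical names that only borrow their spelling from the real PySpark calls `sc.parallelize`, `rdd.map`, `rdd.filter`, `rdd.collect`, and `rdd.count`. It is a single-process illustration of the idea, not how Spark is implemented.

```python
from typing import Callable, Iterable, Iterator, List


class ToyRDD:
    """Toy, single-process stand-in for an RDD (hypothetical, not Spark's API)."""

    def __init__(self, source: Callable[[], Iterator]):
        # Keep a recipe for producing the elements, not the elements themselves.
        self._source = source

    @classmethod
    def parallelize(cls, data: Iterable) -> "ToyRDD":
        snapshot = tuple(data)  # immutable copy of the input data
        return cls(lambda: iter(snapshot))

    # Transformations: return a new ToyRDD and do no work yet.
    def map(self, fn: Callable) -> "ToyRDD":
        return ToyRDD(lambda: (fn(x) for x in self._source()))

    def filter(self, pred: Callable) -> "ToyRDD":
        return ToyRDD(lambda: (x for x in self._source() if pred(x)))

    # Actions: run the recorded pipeline and return a plain Python value.
    def collect(self) -> List:
        return list(self._source())

    def count(self) -> int:
        return sum(1 for _ in self._source())


numbers = ToyRDD.parallelize(range(1, 6))
even_squares = numbers.map(lambda x: x * x).filter(lambda x: x % 2 == 0)

print(even_squares.collect())  # [4, 16]
print(numbers.collect())       # [1, 2, 3, 4, 5] (the parent is unchanged)
```

Each transformation returns a new object and leaves its parent untouched, which mirrors the immutability described above; nothing is computed until an action such as `collect` is called.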

Apache Spark RDD Introduction Tutorial (CloudDuggu)

Spark interfaces. There are three key Spark interfaces that you should know about; the first is the RDD.

Resilient distributed dataset (RDD). Apache Spark's first abstraction was the RDD. It is an interface to a sequence of data objects, consisting of one or more types, that are located across a collection of machines (a cluster).

RDDs are an immutable, resilient, and distributed representation of a collection of records partitioned across all nodes in the cluster. In Spark programming, RDDs are the primordial data structure; Datasets and DataFrames are built on top of RDDs. Spark RDDs are presented through an API in which the dataset is represented as an object.
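
To make "partitioned" and "resilient" more concrete, here is a rough sketch in plain Python (again not Scala, so it runs without Spark). It assumes a simplified model in which each partition remembers the chain of functions that produced it and can be rebuilt from the source data when lost. `split_into_partitions`, `PartitionedToyRDD`, and `lose_partition` are hypothetical names invented for this illustration; real Spark distributes partitions across machines and tracks lineage internally.

```python
from typing import Callable, Dict, List, Sequence, Tuple


def split_into_partitions(data: Sequence, num_partitions: int) -> List[list]:
    """Slice data into num_partitions contiguous chunks of near-equal size."""
    n = len(data)
    bounds = [i * n // num_partitions for i in range(num_partitions + 1)]
    return [list(data[bounds[i]:bounds[i + 1]]) for i in range(num_partitions)]


class PartitionedToyRDD:
    """Toy model of a partitioned dataset with lineage (not Spark's API)."""

    def __init__(self, base: List[list], lineage: Tuple[Callable, ...] = ()):
        self._base = base                      # source data, one list per partition
        self._lineage = lineage                # ordered per-element functions
        self._cache: Dict[int, list] = {}      # partition index -> computed rows

    def map(self, fn: Callable) -> "PartitionedToyRDD":
        # Record the step in the lineage instead of running it now.
        return PartitionedToyRDD(self._base, self._lineage + (fn,))

    def compute_partition(self, index: int) -> list:
        # Rebuild the partition from source data and lineage if it is missing.
        if index not in self._cache:
            rows = self._base[index]
            for fn in self._lineage:
                rows = [fn(x) for x in rows]
            self._cache[index] = rows
        return self._cache[index]

    def lose_partition(self, index: int) -> None:
        """Simulate a node failure by dropping one computed partition."""
        self._cache.pop(index, None)

    def collect(self) -> list:
        return [x for i in range(len(self._base)) for x in self.compute_partition(i)]


parts = split_into_partitions(list(range(10)), 3)
print(parts)                      # [[0, 1, 2], [3, 4, 5], [6, 7, 8, 9]]

rdd = PartitionedToyRDD(parts).map(lambda x: x * 10)
print(rdd.collect())              # [0, 10, 20, 30, 40, 50, 60, 70, 80, 90]

rdd.lose_partition(1)             # pretend the node holding partition 1 failed
print(rdd.compute_partition(1))   # [30, 40, 50], rebuilt from the lineage
```

Only the lost partition is recomputed; the other partitions stay as they were, which is the intuition behind recovering from a failure without restarting the whole job.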
