Fine-Tuning an LLM in Snowpark Container Services with AutoTrain

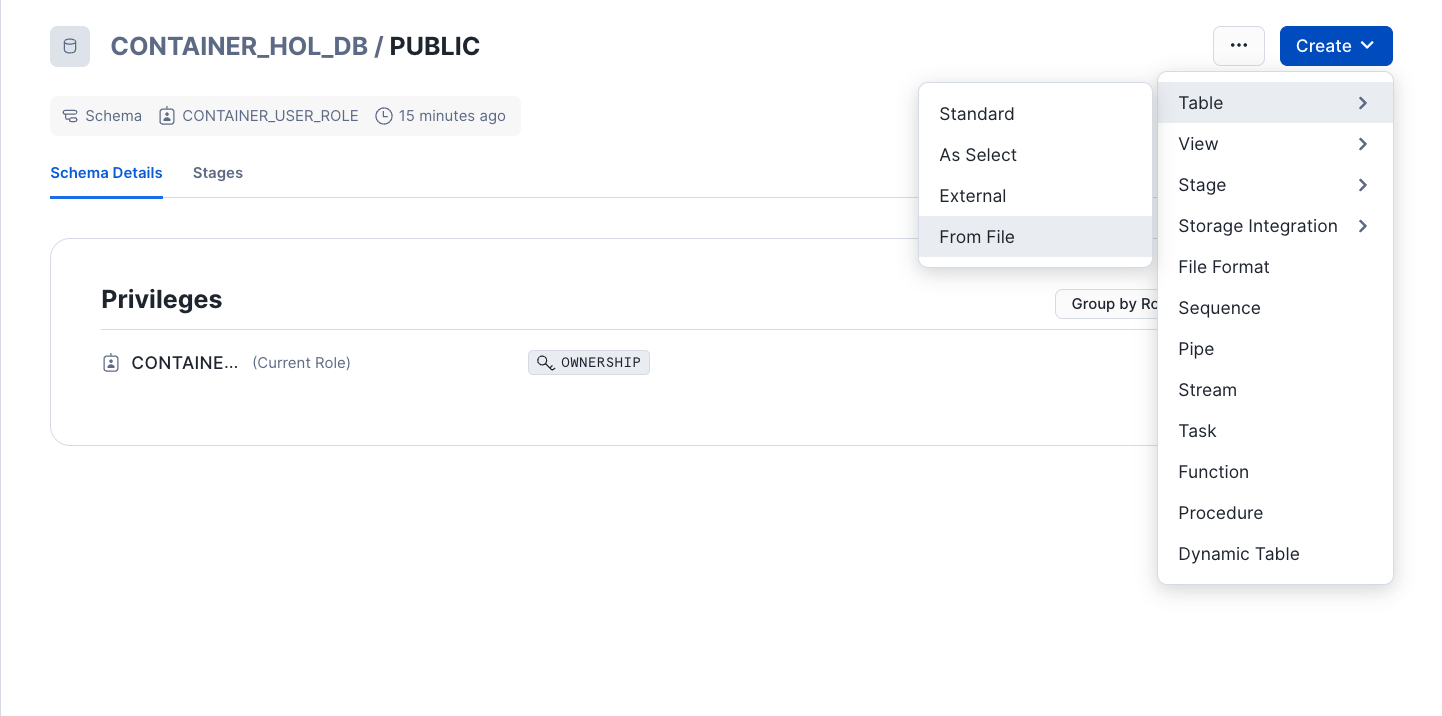

Fine-tuning can be a rather complex process. To alleviate much of this complexity, we will run Hugging Face's AutoTrain Advanced Python package in a containerized service to securely fine-tune an LLM using Snowflake compute. AutoTrain Advanced will use pairs of inputs and outputs to fine-tune an open-source LLM to make its outputs…

Snowflake announced Snowpark Container Services (SPCS) in 2023, enabling virtually any workload to run securely in Snowflake. SPCS also provides GPU compute, which is critical for those tasked with…
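The input/output pairs mentioned above have to be turned into a training file before a fine-tuning run. The sketch below shows one way this might be done with only the Python standard library: each pair is rendered into a single prompt string and written to a one-column CSV. The column name `text`, the prompt template, and the sample product rows are all assumptions made for illustration; check the AutoTrain Advanced documentation for the column names and format your version expects.

```python
import csv
import io

# Hypothetical prompt template: this layout is an assumption for illustration,
# not a format required by AutoTrain Advanced.
TEMPLATE = "### Input:\n{input}\n\n### Output:\n{output}"


def build_training_csv(pairs, text_column="text"):
    """Render (input, output) pairs into a one-column CSV string.

    `text_column` defaults to "text", which is assumed here to match the
    column the trainer reads; adjust it to your own configuration.
    """
    buffer = io.StringIO(newline="")
    writer = csv.DictWriter(buffer, fieldnames=[text_column])
    writer.writeheader()
    for source, target in pairs:
        writer.writerow({text_column: TEMPLATE.format(input=source, output=target)})
    return buffer.getvalue()


# Made-up sample pairs: product metadata in, product description out.
pairs = [
    ("name: trail shoe; color: red", "A red trail running shoe."),
    ("name: steel bottle; size: 1 L", "A one-litre steel water bottle."),
]

csv_text = build_training_csv(pairs)

# Read the CSV back to confirm that each pair survived as one row.
rows = list(csv.DictReader(io.StringIO(csv_text, newline="")))
print(len(rows), "training rows")
```

Keeping the template in one constant makes it easier to use the same layout later when prompting the fine-tuned model for evaluation.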

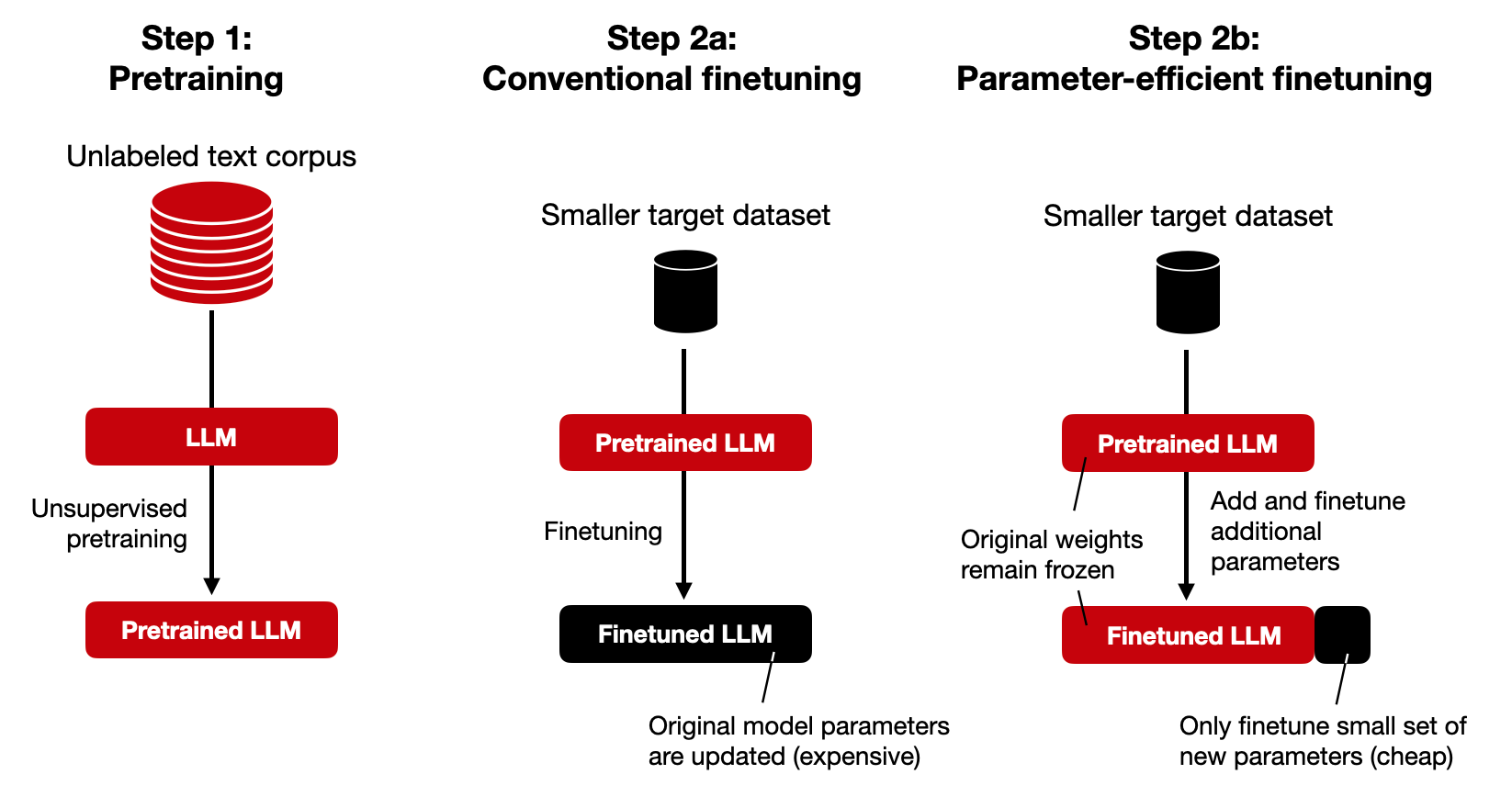

Finetuning LLMs Efficiently with Adapters

Overview. In this guide, we will fine-tune a large language model (LLM) on product metadata securely in Snowpark Container Services using AutoTrain Advanced from Hugging Face. By the end of the session, you will have an LLM trained to describe product metadata and an interactive web application to prompt the fine-tuned LLM for evaluation.

We were able to leverage Snowpark Container Services (currently in private preview) to set up a development environment with JupyterLab that would allow us to bring in and tune an LLM from…

AutoTrain simplifies fine-tuning large language models (LLMs) by providing an automated, low-code approach. This guide offers a walkthrough on using AutoTrain to create AI models, covering everything from installation to advanced fine-tuning techniques.

Powering the latest LLM innovation, Llama v2 in Snowflake, part 1. This blog series covers how to run, train, fine-tune, and deploy large language models securely inside your Snowflake account with Snowpark Container Services. This year there has been a surge of progress in the world of open-source large language models (LLMs).

Using Lamini to Fine-Tune LLMs in Snowflake with Snowpark Container

Register, log, and deploy Llama 2 into Snowpark Container Services. Run the cells under this section to register, log, and deploy the Llama 2 base model into SPCS. If all goes well, you should see cell output showing the ModelReference object. Deploying the model for the first time can take roughly 25 to 30 minutes.

Solution architecture: fine-tuning LLMs using Snowpark Container Services and Amazon Bedrock. The user interacts with the Streamlit app and provides a prompt and/or parameters. The Streamlit app receives those prompts and accesses relevant data from Snowflake. The app then passes the user's prompts and the Snowflake data to a Bedrock model.
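The request flow in the solution architecture above (user prompt in, Snowflake data fetched, both passed to the model) can be sketched in a few lines. This is only an illustration under stated assumptions: `build_prompt`, `answer`, `fake_fetch`, and `fake_model` are hypothetical names, the prompt layout is invented, and the Snowflake query and Bedrock call are replaced by stand-in functions so that the example runs without any credentials.

```python
from typing import Callable, Mapping, Sequence

Row = Mapping[str, object]


def build_prompt(user_prompt: str, rows: Sequence[Row]) -> str:
    """Combine the user's prompt with rows fetched from Snowflake.

    The layout below is a hypothetical choice, not a format required by any model.
    """
    context_lines = [
        ", ".join(f"{key}={value}" for key, value in row.items()) for row in rows
    ]
    context = "\n".join(context_lines) if context_lines else "(no rows)"
    return f"Context from Snowflake:\n{context}\n\nUser request:\n{user_prompt}"


def answer(
    user_prompt: str,
    fetch_rows: Callable[[str], Sequence[Row]],
    invoke_model: Callable[[str], str],
) -> str:
    """Run the three steps: receive the prompt, fetch data, call the model."""
    rows = fetch_rows(user_prompt)
    return invoke_model(build_prompt(user_prompt, rows))


# Stand-ins (assumptions): a real app would query Snowflake and call a Bedrock
# model here instead of returning canned values.
def fake_fetch(prompt: str) -> Sequence[Row]:
    return [{"product": "trail shoe", "color": "red"}]


def fake_model(prompt: str) -> str:
    return f"[model saw {len(prompt)} characters]"


reply = answer("Describe this product.", fake_fetch, fake_model)
print(reply)
```

Passing the data fetcher and the model call in as functions keeps the prompt assembly testable on its own, and the stand-ins can later be swapped for real Snowflake and Bedrock clients.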
