Fine-Tuning Large Language Models (LLMs) with Example Code

Fine-Tuning Large Language Models

This is the 5th video in a series on using large language models (LLMs). In the context of the Phi-2 model, these modules are used to fine-tune the model for instruction-following tasks: by fine-tuning them, the model learns to better understand and respond to instructions. The results fall somewhat short of Mistral Instruct but beat Llama, with a much smaller model.
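The passage does not say which modules are meant, so the sketch below assumes, hypothetically, that they are the attention projections (names such as `q_proj`, `k_proj`, `v_proj` and `dense`, loosely modeled on the Hugging Face Phi layout). Fine-tuning "only these modules" means freezing every other parameter. This plain-Python toy shows just the name-matching step and how small the trainable share becomes; the parameter names and weight counts are invented for illustration.

```python
# Toy "model": parameter name -> number of weights.
# Names loosely follow the Hugging Face Phi layout; counts are made up.
PARAMS = {
    "embed_tokens.weight": 1000,
    "layers.0.self_attn.q_proj.weight": 400,
    "layers.0.self_attn.k_proj.weight": 400,
    "layers.0.self_attn.v_proj.weight": 400,
    "layers.0.self_attn.dense.weight": 400,
    "layers.0.mlp.fc1.weight": 1600,
    "layers.0.mlp.fc2.weight": 1600,
    "lm_head.weight": 1000,
}

# Hypothetical choice of modules to fine-tune; the text does not name them.
TARGET_MODULES = ("q_proj", "k_proj", "v_proj", "dense")


def mark_trainable(params, targets):
    """Map each parameter name to True if it belongs to a target module, else False (frozen)."""
    return {name: any(t in name.split(".") for t in targets) for name in params}


def count_trainable(params, flags):
    """Return (trainable weights, total weights)."""
    trainable = sum(n for name, n in params.items() if flags[name])
    return trainable, sum(params.values())


if __name__ == "__main__":
    flags = mark_trainable(PARAMS, TARGET_MODULES)
    trainable, total = count_trainable(PARAMS, flags)
    print(f"training {trainable} of {total} weights ({trainable / total:.1%})")
    # prints: training 1600 of 6800 weights (23.5%)
```

In PyTorch, the same selection would typically be applied by iterating over `model.named_parameters()` and setting each parameter's `requires_grad` flag from a check like `mark_trainable`.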

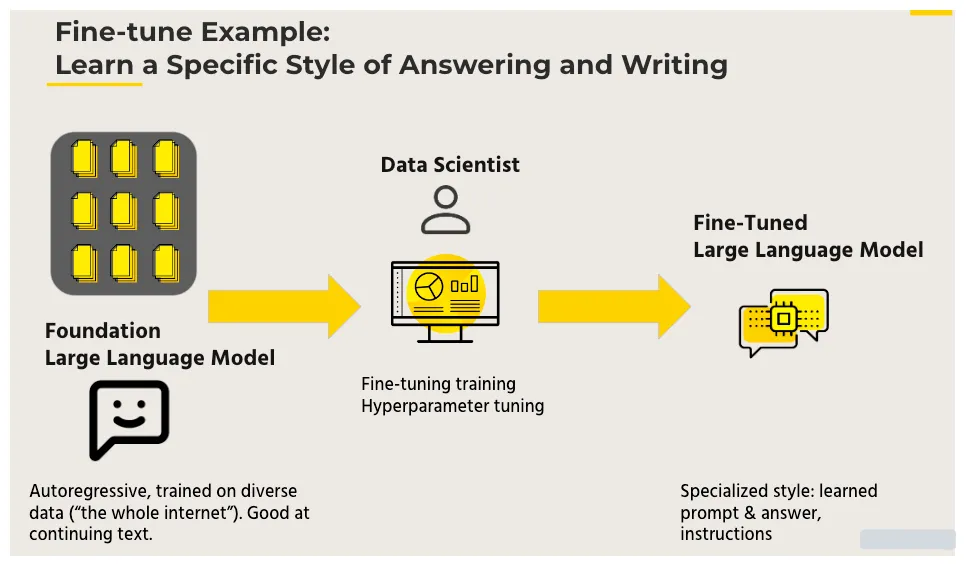

Everything You Need To Know About Fine-Tuning LLMs

Fine-tuning an LLM involves additional training of a pre-existing model, which has already learned patterns and features from an extensive dataset, on a smaller, domain-specific dataset. In the context of "LLM fine-tuning," LLM denotes a "large language model," such as OpenAI's GPT series. The approach matters because it adapts a general-purpose model to a specific domain or task without training a new model from scratch. Put simply, fine-tuning takes a pre-trained model (one trained on a big, public corpus) and further trains it on a new, smaller dataset or with a specific …

This is the 5th article in a series on using large language models (LLMs) in practice. In this post, we discuss how to fine-tune (FT) a pre-trained LLM. We start by introducing key FT concepts and techniques, then finish with a concrete example of how to fine-tune a model locally using Python and Hugging Face's software ecosystem.

1. Large language models (LLMs): models with a very large number of parameters (e.g., GPT-3, with 175B parameters), trained on massive datasets. Commonly known as foundation models.
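The definition of fine-tuning above (keep training a pre-trained model on a smaller, domain-specific dataset) can be illustrated with a toy stand-in rather than an LLM. In this sketch, a one-feature linear model is first "pre-trained" on a large synthetic dataset, then fine-tuned by continuing gradient descent from those weights on a small, shifted "domain" dataset. All data, slopes, and learning rates are invented. Because the domain task is assumed to resemble the generic one, starting from the pre-trained weights reaches a lower domain error than training from scratch with the same small budget.

```python
import random


def mse(params, data):
    """Mean squared error of the line y = w*x + b on (x, y) pairs."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(params, data, lr, steps):
    """Full-batch gradient descent on MSE, starting from the given (w, b)."""
    w, b = params
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def make_data(rng, n, slope, intercept, noise):
    """Synthetic (x, y) pairs around a line, with Gaussian noise."""
    pairs = []
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        pairs.append((x, slope * x + intercept + rng.gauss(0.0, noise)))
    return pairs


rng = random.Random(0)
generic = make_data(rng, 1000, slope=2.0, intercept=1.0, noise=0.1)  # large "pre-training" set
domain = make_data(rng, 20, slope=2.4, intercept=0.8, noise=0.05)    # small, shifted "domain" set

pretrained = train((0.0, 0.0), generic, lr=0.5, steps=200)  # pre-training
finetuned = train(pretrained, domain, lr=0.1, steps=20)     # fine-tuning: start from pre-trained weights
scratch = train((0.0, 0.0), domain, lr=0.1, steps=20)       # same budget, no pre-training

if __name__ == "__main__":
    for name, params in [("pre-trained", pretrained), ("fine-tuned", finetuned), ("from scratch", scratch)]:
        print(f"{name:>12}: domain MSE = {mse(params, domain):.4f}")
```

The smaller learning rate and few steps in the fine-tuning call reflect the usual intent: nudge the pre-trained weights toward the new data rather than overwrite them.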

Here's a basic example of fine-tuning a model for sequence classification.

Step 1: Choose a pre-trained model and a dataset. To fine-tune a model, we always need a pre-trained model to start from. In our case, we perform some simple fine-tuning using GPT-2.

[Screenshot: the Hugging Face datasets hub, selecting OpenAI's GPT-2 model.]

The notebooks "Finetuning large language models (local machine)" and "Finetuning large language models (Colab)" include numerous extra comments, slides, and explanations based on the information the lecturers provided during the training.

scripts: a repository of utility scripts, including those for data preprocessing and ARC evaluation functions.
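The sequence-classification step with GPT-2 can be sketched without downloading anything. Loading the real model (for example with Hugging Face's `AutoModelForSequenceClassification` and the `gpt2` checkpoint) also requires setting a pad token, because GPT-2's tokenizer does not define one. The plain-Python sketch below only illustrates the mechanics under stated assumptions: a hypothetical frozen causal encoder stands in for GPT-2, each sequence is summarized by the hidden state of its last non-padding token (how a causal model like GPT-2 is typically pooled for classification), and only a small logistic-regression head is trained. The vocabulary, embeddings, and four-example dataset are invented; real fine-tuning would usually update the transformer weights too, not just the head.

```python
import math

PAD_ID = 0  # hypothetical padding id (GPT-2's tokenizer defines no pad token by default)

# Hypothetical frozen token embeddings standing in for a pre-trained model.
EMBED = {
    1: [1.0, 0.0],  # "great"
    2: [0.8, 0.1],  # "good"
    3: [0.0, 1.0],  # "bad"
    4: [0.1, 0.9],  # "awful"
    5: [0.5, 0.5],  # "movie"
}


def frozen_encoder(ids):
    """Causal stand-in for GPT-2: position t's hidden state is the mean embedding of ids[:t + 1]."""
    hidden, total = [], [0.0, 0.0]
    for t, tok in enumerate(ids):
        emb = EMBED.get(tok, [0.0, 0.0])  # PAD and unknown ids map to zeros
        total = [s + e for s, e in zip(total, emb)]
        hidden.append([s / (t + 1) for s in total])
    return hidden


def pool_last_token(hidden, ids):
    """Return the hidden state at the last non-padding position (right padding assumed)."""
    last = max(i for i, tok in enumerate(ids) if tok != PAD_ID)
    return hidden[last]


def features(ids):
    """Frozen encoder followed by last-token pooling."""
    return pool_last_token(frozen_encoder(ids), ids)


def predict(weights, bias, x):
    """Probability of the positive class from the logistic-regression head."""
    z = sum(w * f for w, f in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def train_head(dataset, lr=1.0, epochs=200):
    """SGD on log loss for the head only; the encoder is never updated."""
    feats = [features(ids) for ids, _ in dataset]
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, (_, label) in zip(feats, dataset):
            err = predict(weights, bias, x) - label  # d(log loss)/dz
            weights = [w - lr * err * f for w, f in zip(weights, x)]
            bias -= lr * err
    return weights, bias


# Hypothetical, right-padded toy dataset: (token ids, label), 1 = positive.
TRAIN = [
    ([5, 1, PAD_ID], 1),  # "movie great"
    ([2, 5, 1], 1),       # "good movie great"
    ([5, 3, PAD_ID], 0),  # "movie bad"
    ([4, 5, 3], 0),       # "awful movie bad"
]

if __name__ == "__main__":
    w, b = train_head(TRAIN)
    for ids in ([1, 5, PAD_ID], [3, 4, PAD_ID]):
        print(ids, round(predict(w, b, features(ids)), 3))
```

Because the encoder is causal, padding appended on the right never changes the hidden state at the last real token, which is why pooling there is safe for batched, padded inputs.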
