Lecture 10 4

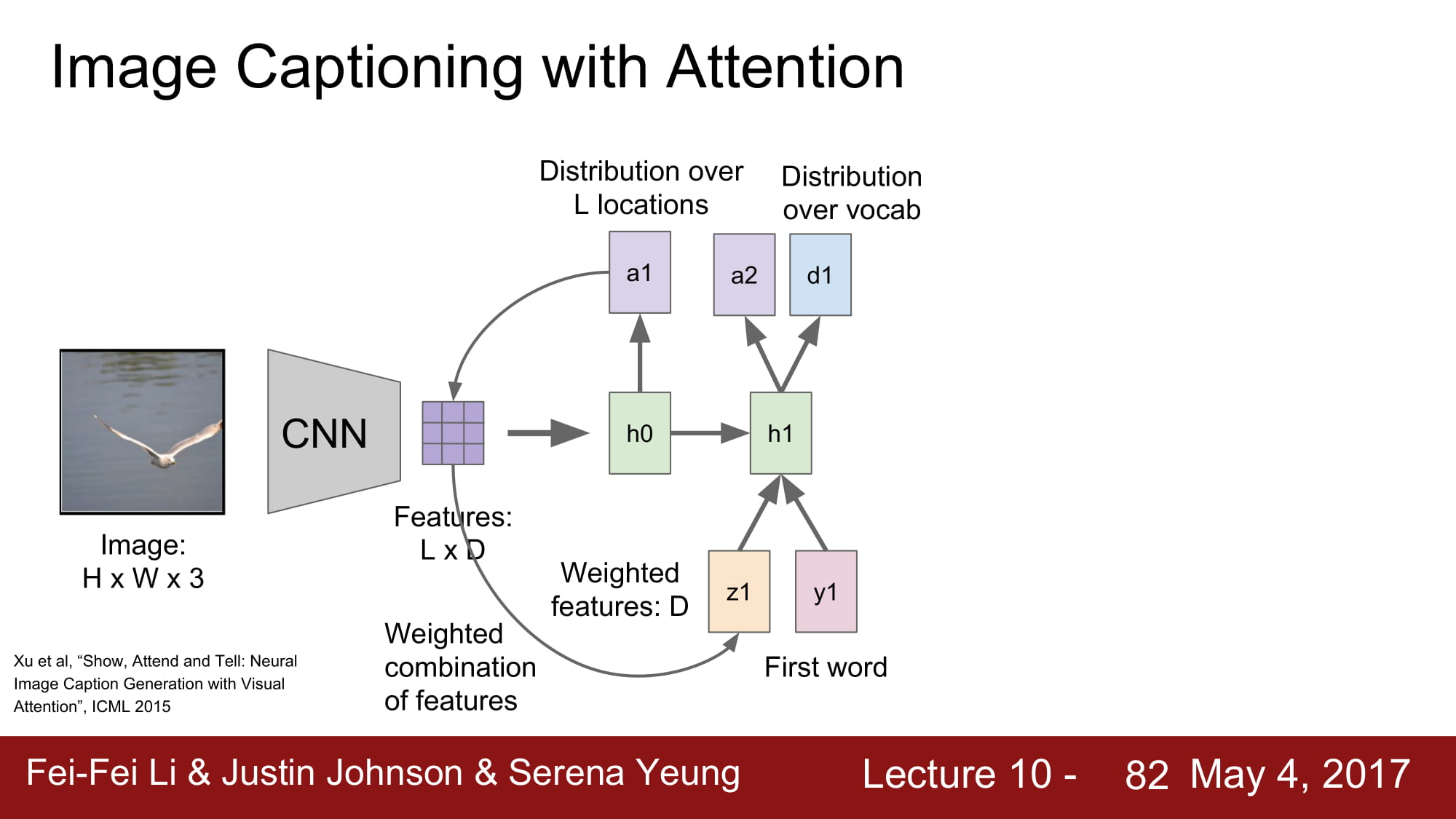

Lecture 10 4 Pages Per Sheet Lecture 10 Mat1114 Introductory

Lecture 10, May 4, 2017. Recurrent neural network (x → RNN → y): we can process a sequence of vectors x by applying a recurrence formula at every time step. Notice: the same function and the same set of parameters are used at every time step.

... through Lecture 10 (Thu April 29). Tues, ... 4, and is worth 15% of your grade. Available for 24 hours on Gradescope from ... PDT. 3-hour consecutive timeframe. Exam will be designed for 1.5 hours. Open book and open internet, but no collaboration. Only make private posts during those 24 hours.
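To make the recurrent neural network recurrence mentioned above concrete, here is a minimal sketch in plain Python. The excerpt does not give the formula itself, so this assumes the common vanilla form h_t = tanh(W_hh h_{t-1} + W_xh x_t + b). The names `rnn_step`, `run_rnn`, `W_hh`, `W_xh`, `b`, and the toy values are illustrative assumptions, not taken from the lecture.

```python
import math


def rnn_step(h_prev, x, W_hh, W_xh, b):
    """One step of an assumed vanilla RNN: h_t = tanh(W_hh h_{t-1} + W_xh x_t + b)."""
    h_new = []
    for i in range(len(h_prev)):
        s = b[i]
        s += sum(W_hh[i][j] * h_prev[j] for j in range(len(h_prev)))
        s += sum(W_xh[i][k] * x[k] for k in range(len(x)))
        h_new.append(math.tanh(s))
    return h_new


def run_rnn(xs, h0, W_hh, W_xh, b):
    """Apply the same step function, with the same parameters, at every time step."""
    h = h0
    states = []
    for x in xs:
        h = rnn_step(h, x, W_hh, W_xh, b)
        states.append(h)
    return states


# Hypothetical toy parameters: hidden size 2, input size 1.
W_hh = [[0.5, 0.0], [0.0, 0.5]]
W_xh = [[1.0], [-1.0]]
b = [0.0, 0.0]

xs = [[1.0], [0.0], [-1.0]]  # a sequence of three input vectors
states = run_rnn(xs, [0.0, 0.0], W_hh, W_xh, b)
for t, h in enumerate(states, start=1):
    print(t, h)
```

The loop in `run_rnn` reuses `W_hh`, `W_xh`, and `b` unchanged for every element of the sequence, which is the parameter sharing the excerpt points out.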

Solution Lecture 10 4 Basic Array Example Iterating Through An Array

Lecture 10: Introduction to Machine Learning. Dennis Sun, Stanford University, DATASCI 112, February 2, 2024.

CSC321 Lecture 10: Training RNNs. February 23, 2015. Last time, we saw that RNNs can perform some interesting computations. Now let's look at how to train them. We'll use "backprop through time," which is really just backprop. There are only 2 new ideas we need to think about: weight constraints, and exploding and vanishing gradients.

Lecture video. Lewis structures are simplistic views of molecular structure. They are based on the idea that the key to covalent bonding is electron sharing and having each atom achieve a noble gas electron configuration. Lewis structures correctly predict electron configurations around atoms in molecules about 90% of the time.

Left nullspace. The matrix $A^T$ has $m$ columns. We just saw that $r$ is the rank of $A^T$, so the number of free columns of $A^T$ must be $m - r$: $\dim N(A^T) = m - r$. The left nullspace is the collection of vectors $y$ for which $A^T y = 0$. Equivalently, $y^T A = 0$; here $y^T$ and $0$ are row vectors. We say "left nullspace" because $y^T$ is on the left of $A$.
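The left nullspace dimension count above can be checked on a small example. The following is a sketch using exact fractions and hand-rolled Gaussian elimination; the helper names `transpose`, `rref`, and `nullspace` and the example matrix `A` are assumptions chosen for illustration, not part of the lecture notes.

```python
from fractions import Fraction


def transpose(A):
    return [list(col) for col in zip(*A)]


def rref(M):
    """Return (reduced row echelon form, pivot column indices), using exact fractions."""
    R = [[Fraction(v) for v in row] for row in M]
    n_rows, n_cols = len(R), len(R[0])
    pivots = []
    row = 0
    for col in range(n_cols):
        if row == n_rows:
            break
        # Find a row at or below `row` with a nonzero entry in this column.
        p = next((i for i in range(row, n_rows) if R[i][col] != 0), None)
        if p is None:
            continue  # no pivot here: this is a free column
        R[row], R[p] = R[p], R[row]
        pivot_value = R[row][col]
        R[row] = [v / pivot_value for v in R[row]]
        for i in range(n_rows):
            if i != row and R[i][col] != 0:
                factor = R[i][col]
                R[i] = [a - factor * b for a, b in zip(R[i], R[row])]
        pivots.append(col)
        row += 1
    return R, pivots


def nullspace(M):
    """Basis of {v : M v = 0}, with one basis vector per free column."""
    R, pivots = rref(M)
    n_cols = len(M[0])
    free = [c for c in range(n_cols) if c not in pivots]
    basis = []
    for f in free:
        v = [Fraction(0)] * n_cols
        v[f] = Fraction(1)
        for r_idx, p in enumerate(pivots):
            v[p] = -R[r_idx][f]
        basis.append(v)
    return basis


# Hypothetical example: m = 3 rows, n = 2 columns, rank r = 1.
A = [[1, 2], [2, 4], [3, 6]]
m = len(A)
r = len(rref(A)[1])

# The left nullspace of A is the nullspace of A^T.
left_null = nullspace(transpose(A))
print("rank r =", r, "| dim N(A^T) =", len(left_null), "| m - r =", m - r)
```

Each vector `y` in `left_null` satisfies $y^T A = 0$, and the number of basis vectors matches $m - r$ for this matrix.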

Lecture 10 4 2 Pdf Quantitative Methods In Finance Lecture 10

Lecture 10: The four fundamental subspaces. For some vectors $b$ the equation $Ax = b$ has solutions and for others it does not. Some vectors $x$ are solutions to the equation $Ax = 0$ and some are not. To understand these equations we study the column space, nullspace, row space and left nullspace of the matrix $A$.

CS107, Lecture 10: Introduction to Assembly. Reading: B&O 3.1-3.4. Course overview: 1. Bits and bytes: how can a computer represent integer numbers?

Cs231n Lecture 10 4 Recurrent Neural Networks Strutive07
