Load Data From Different Folders In Same S3 Bucket Into Different

I have been looking for a tool that copies the contents of one AWS S3 bucket into a second AWS S3 bucket without first downloading the content to the local file system. I tried the copy option in the AWS S3 console, but some nested files ended up missing. I also tried the Transmit app (by Panic).

Method 1: via the AWS CLI (easiest). Download and install the AWS CLI on your instance (I am using Windows here, with the 64-bit installer), run `aws configure` to fill in your configuration, and then run a single `aws s3 cp` command in cmd: `cp` copies, and `--recursive` copies all files.

Method 2: via the AWS CLI using Python.
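The same server-side copy can be sketched in Python. This is a minimal sketch, not the author's script. It assumes a boto3 S3 client (`boto3.client("s3")`) is passed in, and the function name `copy_prefix` is hypothetical. Listing through the `list_objects_v2` paginator keeps nested keys from being skipped, and `copy_object` copies inside S3, so nothing is downloaded.

```python
from typing import Any, List


def copy_prefix(client: Any, src_bucket: str, dst_bucket: str, prefix: str = "") -> List[str]:
    """Server-side copy of every object under `prefix` from src_bucket to dst_bucket.

    `client` is assumed to be a boto3 S3 client; objects are copied inside S3,
    so nothing is written to the local file system.
    """
    copied = []
    # list_objects_v2 returns at most 1,000 keys per call; the paginator follows
    # the continuation tokens, so keys in deeply nested "folders" are not skipped.
    paginator = client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=src_bucket, Prefix=prefix):
        # A page with no matching objects has no "Contents" entry at all.
        for obj in page.get("Contents", []):
            key = obj["Key"]
            # copy_object handles objects up to 5 GB in a single request.
            client.copy_object(
                Bucket=dst_bucket,
                Key=key,
                CopySource={"Bucket": src_bucket, "Key": key},
            )
            copied.append(key)
    return copied
```

Usage, with hypothetical bucket names: `copy_prefix(boto3.client("s3"), "source-bucket", "destination-bucket")`. Because `copy_object` is limited to 5 GB per object, larger objects would need the managed `client.copy(...)` method, which performs a multipart copy.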

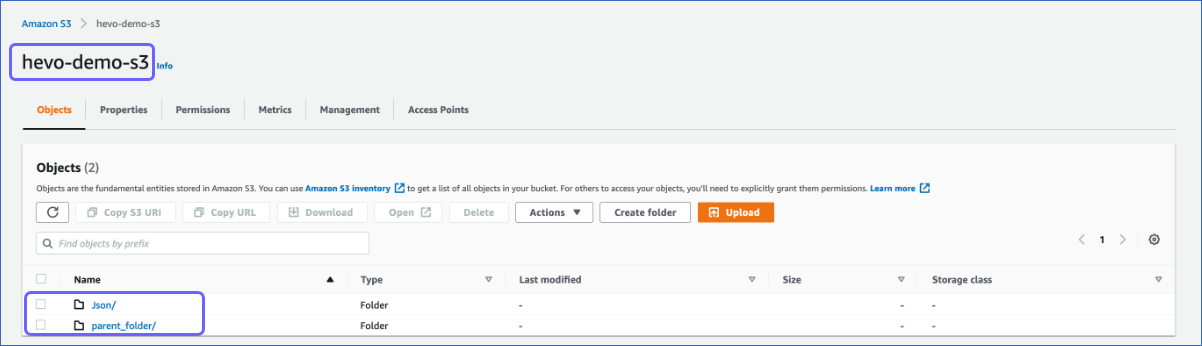

How Can I Load Different Folders Of An Amazon S3 Bucket As Separate

Step 1: Compare the two Amazon S3 buckets. To get started, compare the objects in the source and destination buckets to find the list of objects that you want to copy.

Step 1a: Generate an S3 Inventory for the buckets. Configure Amazon S3 Inventory to generate a daily report on both buckets.

Copy from Amazon S3: to load data from files located in one or more S3 buckets with the Amazon Redshift COPY command, use the FROM clause to indicate how COPY locates the files in Amazon S3. You can provide the object path to the data files as part of the FROM clause, or you can provide the location of a manifest file that contains a list of Amazon S3 object paths.

To copy the files from a local folder to an S3 bucket, run the `aws s3 sync` command, passing it the source directory and the destination bucket as inputs. For example, to copy the files in the current directory to a bucket, open your terminal in the directory that contains the files you want to copy and run the `s3 sync` command.

To create an Amazon S3 trigger for a Lambda function, complete the following steps:

1. Open the Functions page in the Lambda console.
2. In Functions, choose the Lambda function.
3. In Function overview, choose Add trigger.
4. From the trigger configuration dropdown list, choose S3.
5. In Bucket, enter the name of your source bucket.
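Once the trigger is in place, the function itself still has to do the work. A minimal handler sketch, assuming the goal is to copy each newly created object into a second bucket whose name comes from a hypothetical `DEST_BUCKET` environment variable. `copy_new_objects` is likewise a hypothetical helper, split out so the copy logic can be exercised with a stub client.

```python
import os
import urllib.parse
from typing import Any, Dict, List

# Hypothetical environment variable holding the destination bucket name.
DEST_BUCKET_ENV = "DEST_BUCKET"


def copy_new_objects(event: Dict[str, Any], client: Any, dest_bucket: str) -> List[str]:
    """Copy every object named in an S3 event notification into dest_bucket."""
    copied = []
    for record in event.get("Records", []):
        src_bucket = record["s3"]["bucket"]["name"]
        # Keys arrive URL-encoded (a space becomes "+"), so decode before use.
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        client.copy_object(
            Bucket=dest_bucket,
            Key=key,
            CopySource={"Bucket": src_bucket, "Key": key},
        )
        copied.append(key)
    return copied


def lambda_handler(event, context):
    # boto3 ships with the Lambda Python runtime; it is imported here, inside the
    # handler, so copy_new_objects can be tested without boto3 installed.
    import boto3

    client = boto3.client("s3")
    return {"copied": copy_new_objects(event, client, os.environ[DEST_BUCKET_ENV])}
```

`DEST_BUCKET` should name a different bucket from the trigger's source bucket: writing the copy back into the source bucket would fire the trigger again and loop.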

To move large amounts of data from one Amazon S3 bucket to another, use AWS DataSync:

1. Open the AWS DataSync console.
2. Create a task.
3. Create a new location for Amazon S3.
4. Select your S3 bucket as the source location.
5. Update the source location configuration settings.

To load data files from Amazon S3 into Amazon Redshift:

1. Download data files that use comma-separated value (CSV), character-delimited, and fixed-width formats.
2. Create an Amazon S3 bucket and upload the data files to it.
3. Launch an Amazon Redshift cluster and create the database tables.
4. Use COPY commands to load the tables from the data files on Amazon S3.
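To load different folders of the same bucket into different tables, one option is a separate COPY statement per folder: COPY treats the path in the FROM clause as a key prefix and loads every object whose key starts with it. The sketch below only builds the SQL strings. The helper name `build_copy_statements`, the bucket and table names, the IAM role ARN, and the CSV format are assumptions for illustration, and the statements still have to be run against the cluster with a SQL client.

```python
from typing import Dict, List


def build_copy_statements(bucket: str, folder_to_table: Dict[str, str], iam_role_arn: str) -> List[str]:
    """Return one Redshift COPY statement per S3 folder (key prefix)."""
    statements = []
    for folder, table in folder_to_table.items():
        # Normalise to "folder/" so the prefix matches only that folder's keys.
        prefix = folder.strip("/") + "/"
        # COPY loads every object whose key starts with this prefix,
        # so each folder feeds its own table.
        statements.append(
            f"COPY {table} FROM 's3://{bucket}/{prefix}' "
            f"IAM_ROLE '{iam_role_arn}' FORMAT AS CSV;"
        )
    return statements
```

The trailing slash keeps a folder such as `sales` from also matching keys under `sales_archive/`. Values are interpolated without any escaping, so only pass trusted bucket, table, and role names.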
